Details of the model.

Let's keep going through the QMRF documentation. If you don't know what I'm talking about, see my previous post 'Dissecting an AI model (data)'. The second OECD principle for QSAR validation is that a model should have an unambiguous algorithm, so this part of the document describes how the model was developed and how it works.

According to the document, the model can give only three possible answers: hepatotoxic, non-hepatotoxic, or no prediction. Whatever structure is entered, the output is one of these three. The model is a decision tree that classifies substances based on structural alerts (SAs). Structural alerts are structural patterns (substructures) found in chemicals. If a substance contains an alert's pattern, it is assigned that alert's label. What if no pattern is found? Then the model says “unknown”. And what if several patterns are found in one substance? If more hepatotoxic patterns are found, the substance is classified as hepatotoxic, and vice versa.

What are these structural patterns? Reading further in the QMRF: the model uses 11 hepatotoxicity patterns and 2 non-hepatotoxicity patterns, and if none of these 13 patterns match, the prediction is “unknown”. Models that classify substances using predefined patterns like these are called rule-based models. They are also called expert systems, because the patterns are usually defined by experts. The idea is to encode expert knowledge in a system that can classify whether a substance is toxic or not.
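The decision rule described above can be sketched in a few lines of Python. This is a minimal sketch, not VEGA's implementation: the alert IDs (`HT1`…`HT11`, `NHT1`, `NHT2`) and the `predict` function are hypothetical, substructure matching is assumed to have been done beforehand (for example with a cheminformatics toolkit), and returning “unknown” on a tie is an assumption, because the summary above does not say what happens when the counts are equal.

```python
from collections import Counter

# Hypothetical alert library: 11 hepatotoxicity alerts and 2 non-hepatotoxicity alerts.
# The IDs are placeholders; the real patterns are listed in the model's QMRF.
ALERTS = {f"HT{i}": "hepatotoxic" for i in range(1, 12)}
ALERTS.update({f"NHT{i}": "non-hepatotoxic" for i in range(1, 3)})


def predict(matched_alerts):
    """Classify a substance from the set of alert IDs that matched its structure.

    Substructure matching is assumed to happen elsewhere; this function only
    implements the "more alerts of one kind wins" rule.
    """
    votes = Counter(ALERTS[alert_id] for alert_id in matched_alerts)
    toxic = votes["hepatotoxic"]          # Counter returns 0 for missing keys
    non_toxic = votes["non-hepatotoxic"]
    if toxic == 0 and non_toxic == 0:
        return "unknown"  # none of the 13 patterns matched
    if toxic > non_toxic:
        return "hepatotoxic"
    if non_toxic > toxic:
        return "non-hepatotoxic"
    return "unknown"  # tie: assumed behaviour, not taken from the QMRF
```

For example, `predict({"HT1", "HT2", "NHT1"})` gives "hepatotoxic" (two toxic alerts against one), while `predict(set())` gives "unknown".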

So the liver toxicity model in VEGA decides its result using 13 patterns. What happens to substances that match none of the 13 patterns? The model has no idea; we need more information to check them. Let's look again at our previous prediction for benzoic acid. The result was 'Model prediction is Unknown', which means that none of the 13 patterns matched a substructure of benzoic acid. The substance was nevertheless reported as non-toxic, because benzoic acid was already present in the model's dataset.
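The benzoic acid case suggests that the reported result combines two sources: the experimental value, when the substance is already in the model's dataset, and the alert-based prediction otherwise. The sketch below shows that ordering. It is an assumption about how the output is assembled, not VEGA's code; the `DATASET` dictionary and the `final_assessment` function are hypothetical, and looking up by a raw SMILES string is a simplification (a real lookup would compare canonical SMILES or another identifier).

```python
# Hypothetical dataset: SMILES -> experimental label.
# The single entry reflects only what this post reports for benzoic acid.
DATASET = {"OC(=O)c1ccccc1": "non-hepatotoxic"}


def final_assessment(smiles, model_prediction):
    """Return (label, source) for a substance.

    Assumption: a known experimental value takes precedence over the
    alert-based prediction, as in the benzoic acid example.
    Note: plain string lookup only works if SMILES are written identically.
    """
    if smiles in DATASET:
        return DATASET[smiles], "experimental value"
    return model_prediction, "model prediction"
```

With this ordering, `final_assessment("OC(=O)c1ccccc1", "unknown")` returns `("non-hepatotoxic", "experimental value")`: the alerts say nothing, but the known data point decides.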

One fundamental question here: is this really AI...? It's so simple... Didn't you say it was an "AI" predicting liver toxicity? The definition of artificial intelligence is broader than you might think. According to Wikipedia, it is a computer system with capabilities that mimic human intelligence. That's pretty broad. Do expert systems mimic human intelligence? Yes, they do. When experts decide whether a substance is toxic, they look at its chemical structure, search it for certain patterns, and make a prediction. An expert system is a computerized implementation of this approach, which is why it falls under the category of artificial intelligence.

When people think of AI these days, many immediately think of ChatGPT. Couldn't ChatGPT also tell us this much about the toxicity of benzoic acid? I asked about the liver toxicity of benzoic acid using the o1-preview model in ChatGPT, and it gave me fairly accurate information. ChatGPT can explain benzoic acid well because a lot of information about it is available. But what if it's a new substance with little information? Then the ability to interpret the structure of the substance becomes important. (I also tested this with Copilot, and the results were not good... When the prompt said the substance was hepatotoxic, it explained why it was hepatotoxic. When the prompt was reversed and said it was not hepatotoxic, it explained why it was not hepatotoxic...)

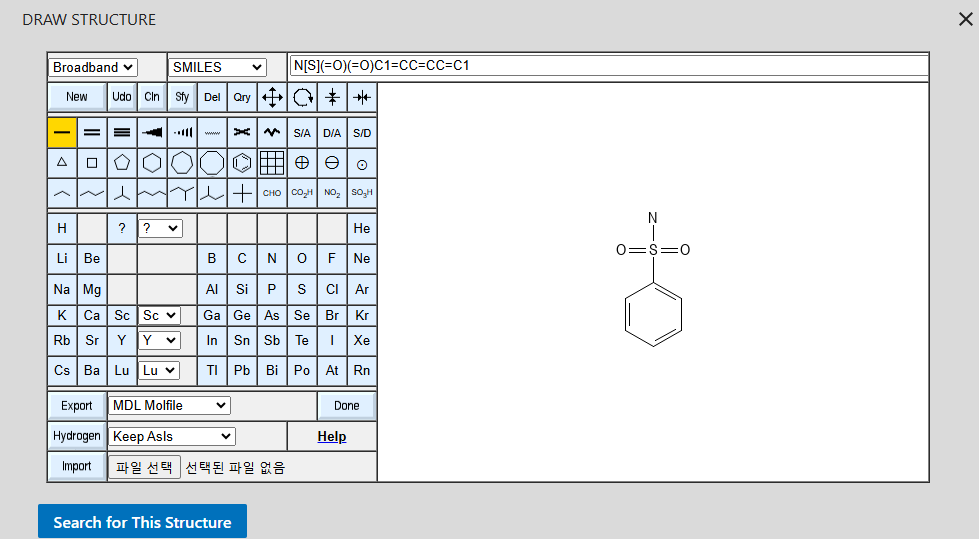

What about o1-preview's ability to analyze structures? I asked about one of the structural alert patterns in the model, pattern 2. For reference, pattern 2 is written in SMILES as NS(=O)(=O)c1ccccc1, which is benzenesulfonamide.

My prompt: what is 2D structure of NS(=O)(=O)c1ccccc1?

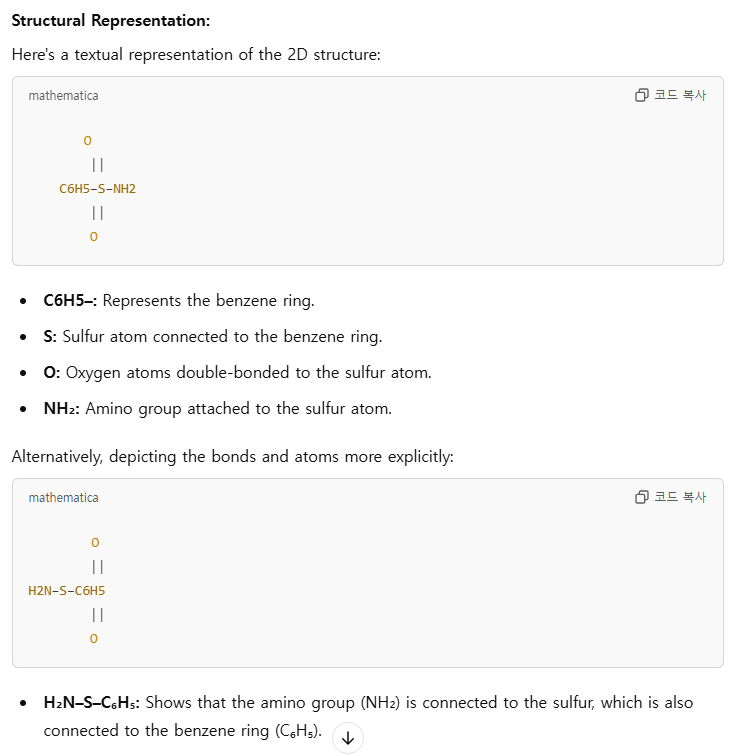

It provides fairly accurate information, but the structural part of the answer seems a bit unstable. It suggests two structures, and the second one attaches the oxygens in the wrong place. The model has improved a lot, yet it still stumbles on structural information. I believe this is why we still need expert systems.

ChatGPT is a language model, so it does well at finding relevant information. With the advent of multimodal models, it is also being developed to handle images and videos. A SMILES code is a string, but it is difficult to properly express the chemical properties of a molecular structure with a SMILES code alone.

Agent systems, in which a language model calls external tools, have recently emerged. What if ChatGPT used an expert system as a tool to provide answers? Its answers could then be based on the chemical knowledge of experts and would likely be of higher quality, which means that expert systems can contribute to improving the performance of language models. The predictions of rule-based models or expert systems are highly reliable because they are based on the knowledge of experts, and the reasoning behind each prediction is clear. Reliable information is often more meaningful than uncertain answers. This is why these models are still popular in practice.
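To make the agent idea a little more concrete, here is a toy sketch of the tool-calling pattern: the language model emits a request to call a tool, the tool (a stand-in for an expert system) returns a rule-based result together with its reason, and that result is passed back to the model to phrase the answer. Everything here is hypothetical: `hepatotox_expert_tool`, the `TOOLS` registry, `run_tool_call` and the JSON call format stand in for a real model's function-calling output and a real expert system such as the VEGA model. No actual LLM API is used.

```python
import json


def hepatotox_expert_tool(smiles):
    """Hypothetical stand-in for an expert system.

    It always returns a fixed result; a real tool would run substructure
    matching against the alert library and report which alerts fired.
    """
    return {"smiles": smiles, "prediction": "unknown",
            "reason": "no structural alert matched"}


# Registry of tools the (hypothetical) agent is allowed to call.
TOOLS = {"hepatotox_expert_tool": hepatotox_expert_tool}


def run_tool_call(tool_call_json):
    """Execute a tool call emitted by the language model (call format assumed)."""
    call = json.loads(tool_call_json)
    result = TOOLS[call["name"]](**call["arguments"])
    return json.dumps(result)  # serialized so it can be fed back to the model


# In a real agent the language model would produce this JSON; here it is hard-coded.
model_output = '{"name": "hepatotox_expert_tool", "arguments": {"smiles": "OC(=O)c1ccccc1"}}'
tool_result = run_tool_call(model_output)
```

The design point is that the prediction and its reason come from the rule base, so the language model explains a result it did not invent instead of guessing from the prompt's wording.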
